Smart LQA — A New Level of Linguistic Quality Assessment

Quality control, as a component of quality management, is required to maintain and continuously improve the quality of services provided to clients. In a BANI (brittle, anxious, nonlinear, incomprehensible) world where unpredictability is the norm, automating linguistic quality assessment has become critical. To minimize manual effort, machine learning algorithms and artificial intelligence should be used to analyze data from previous assessments and to initiate new linguistic quality assessments automatically.

Linguistic services are at the core of Janus Worldwide’s business value, so the idea of creating a quality assessment tool had been brewing for a long time. We believe we have now fully succeeded in bringing it to life.

Before Smart LQA, assessments were conducted outside of a unified information system, and the decision to initiate an LQA project relied on the expert judgment of the quality assurance team. Our team members are all excellent professionals who do a great job, but their workflow was reactive rather than proactive. It was difficult to quickly see whether quality was trending up or down for a given client, translator, or language pair, and even gathering information on a single client meant piecing together disparate data.

The introduction of Smart LQA changed all that. The solution combines machine learning algorithms for data processing with accumulated assessment results, which effectively makes it a form of AI-driven linguistic quality management. Another benefit is that LQA work has shifted from a reactive to a proactive approach: using the accumulated data and a set of predefined conditions, Smart LQA automatically initiates linguistic quality checks at the appropriate frequency.

The system architecture consists of three blocks, or modules. In the current version, all modules work with a single database, but Smart LQA is designed to integrate with different data storage systems if the client’s IT infrastructure separates operational and analytical (BI) systems due to large volumes of data.
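To make the storage point concrete, here is a minimal Python sketch, assuming the modules talk to storage only through a small interface. The names `Assessment`, `AssessmentStore`, `InMemoryStore`, and `SplitStore` are hypothetical and are not taken from Smart LQA. The in-memory classes stand in for real databases, and the direct copy in `SplitStore.save` stands in for whatever replication a real operational/BI split would use.

```python
from dataclasses import dataclass
from datetime import date
from typing import Protocol


@dataclass(frozen=True)
class Assessment:
    """One completed LQA check (hypothetical record layout)."""
    client: str
    language_pair: str
    vendor: str
    completed_on: date
    score: float


class AssessmentStore(Protocol):
    """Storage interface the modules depend on instead of a concrete database."""

    def save(self, assessment: Assessment) -> None: ...

    def load_all(self) -> list[Assessment]: ...


class InMemoryStore:
    """Stand-in for the single shared database used in the current version."""

    def __init__(self) -> None:
        self._rows: list[Assessment] = []

    def save(self, assessment: Assessment) -> None:
        self._rows.append(assessment)

    def load_all(self) -> list[Assessment]:
        return list(self._rows)


class SplitStore:
    """Writes go to an operational store; reads for analysis come from a BI store."""

    def __init__(self, operational: AssessmentStore, analytical: AssessmentStore) -> None:
        self.operational = operational
        self.analytical = analytical

    def save(self, assessment: Assessment) -> None:
        self.operational.save(assessment)
        # Placeholder: a real deployment would replicate via an ETL job, not a direct copy.
        self.analytical.save(assessment)

    def load_all(self) -> list[Assessment]:
        return self.analytical.load_all()
```

Because the Triggers, Operations, and Reporting code would depend only on `AssessmentStore`, replacing the single shared database with separate operational and analytical stores would not require changes to the modules themselves.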

Module 1 – Triggers

This is the configuration module, which is responsible for initiating linguistic quality checks based on specific parameters. It provides a set of highly customizable conditions (we call them triggers) that determine when a quality check should be initiated. Examples of triggers include a new client or language pair, the maximum allowed time since the last check being reached, or a specified volume of translated text within a project being reached. The results of previous checks are also factored in, so the frequency of future checks adjusts dynamically to past performance.
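The trigger logic described above could look roughly like the following sketch. It assumes one history record per client and language pair, and every threshold in the hypothetical `TriggerConfig` (90 days, 50,000 words, a pass mark of 95, halving the interval after a failed check) is an illustrative value, not a published Smart LQA setting. The dynamic adjustment is modeled in the simplest possible way: a score below the pass mark shortens the allowed interval, so the next check comes sooner.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class TriggerConfig:
    """Hypothetical trigger thresholds; real Smart LQA parameters are not published."""
    max_interval: timedelta = timedelta(days=90)  # maximum time since the last check
    volume_threshold: int = 50_000                # words translated since the last check
    passing_score: float = 95.0                   # below this, checks become more frequent
    failure_factor: float = 0.5                   # interval multiplier after a failed check


@dataclass
class PairHistory:
    """What is known about one client + language pair (illustrative fields)."""
    last_check: Optional[date]  # None means the client or language pair is new
    words_since_last_check: int
    last_score: Optional[float]


def effective_interval(cfg: TriggerConfig, history: PairHistory) -> timedelta:
    """Shorten the allowed interval when the previous check scored below the pass mark."""
    if history.last_score is not None and history.last_score < cfg.passing_score:
        return cfg.max_interval * cfg.failure_factor
    return cfg.max_interval


def fired_triggers(cfg: TriggerConfig, history: PairHistory, today: date) -> list[str]:
    """Return the name of every trigger that would initiate a new LQA check."""
    if history.last_check is None:
        return ["new client or language pair"]
    fired: list[str] = []
    if today - history.last_check >= effective_interval(cfg, history):
        fired.append("maximum interval reached")
    if history.words_since_last_check >= cfg.volume_threshold:
        fired.append("volume threshold reached")
    return fired
```

With these defaults, a pair last checked on 1 January 2024 with a score of 92 is due again after 45 days, so an evaluation on 20 February fires the interval trigger, while the same pair with a score of 98 is not yet due.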

Module 2 – Operations

Unfortunately, manual work cannot be avoided entirely in this process. Each assignment in this module requires detailed instructions on how to complete the LQA task, and this is where (to reveal one of our secrets) we plan to use AI to generate these assignments quickly.

This module also tracks completed assessments, compiles historical data, and calculates final scores. Importantly, a post-assessment arbitration process ensures objectivity. All of this has been successfully implemented, so users with a humanities background, who tend toward a less systematic but more creative work style, can now realize their creative potential more fully while the program provides the necessary structure. The assessment process consists of four key stages:

- Linguistic service assessment

- Review of the results by the linguistic service provider

- Arbitration stage, if the provider disputes the score

- Final score adjustment based on arbitration results
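The article does not say how final scores are computed. As an assumption only, the sketch below uses an MQM-style scorecard: each logged error carries a severity weight, the weighted penalty per 1,000 words is subtracted from 100, and the arbiter's rulings on disputed errors are applied before the score is recalculated. The weights, the `Finding` fields, and the helper names are all hypothetical.

```python
from dataclasses import dataclass, replace

# Hypothetical severity weights in the spirit of MQM-style scorecards;
# the weights Smart LQA actually uses are not described here.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 10}


@dataclass
class Finding:
    """One error logged by the reviewer during the assessment stage."""
    category: str
    severity: str
    disputed: bool = False  # set by the provider at the review stage
    upheld: bool = True     # set by the arbiter; False removes the penalty


def score(findings: list[Finding], word_count: int) -> float:
    """Score out of 100: weighted penalty points per 1,000 words subtracted from 100."""
    penalty = sum(SEVERITY_WEIGHTS[f.severity] for f in findings if f.upheld)
    return max(0.0, 100.0 - penalty * 1000 / word_count)


def apply_arbitration(findings: list[Finding], rulings: dict[int, bool]) -> list[Finding]:
    """Apply the arbiter's rulings (keyed by finding index) to disputed findings only."""
    adjusted = []
    for i, f in enumerate(findings):
        if f.disputed and i in rulings:
            f = replace(f, upheld=rulings[i])
        adjusted.append(f)
    return adjusted
```

For a 2,000-word sample, one major and two minor errors give 7 penalty points, or 3.5 per 1,000 words, for a score of 96.5. If the arbiter rejects the disputed major error, the adjusted score becomes 99.0.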

Previously, these four stages involved multiple stakeholders, which led to lengthy approvals, extended timelines, and frequently postponed final deadlines. Quality and efficiency depended heavily on stakeholder engagement (here, recall the realities of the BANI world).

So we turned the very interactions between LQA stakeholders into a structured business workflow within the Operations module. Each stage generates a task with a set deadline and notifies the responsible party, streamlining operations, improving deadline predictability, and reducing analysis and processing time!
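A minimal sketch of such a workflow as a state machine, assuming one task per stage, a fixed turnaround per stage, and a `notify` callback in place of real email or messenger integration. The role names and turnaround times in `STAGE_SETTINGS` are hypothetical; the only rule taken from the article is that arbitration happens only when the provider disputes the score.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import Callable, Optional


class Stage(Enum):
    ASSESSMENT = "Linguistic service assessment"
    PROVIDER_REVIEW = "Review by the linguistic service provider"
    ARBITRATION = "Arbitration"
    FINAL_ADJUSTMENT = "Final score adjustment"
    CLOSED = "Closed"


# Hypothetical responsible role and turnaround for each open stage.
STAGE_SETTINGS = {
    Stage.ASSESSMENT: ("reviewer", timedelta(days=5)),
    Stage.PROVIDER_REVIEW: ("vendor", timedelta(days=3)),
    Stage.ARBITRATION: ("arbiter", timedelta(days=3)),
    Stage.FINAL_ADJUSTMENT: ("qa_manager", timedelta(days=1)),
}


@dataclass
class Task:
    stage: Stage
    assignee_role: str
    due: date


def next_stage(current: Stage, disputed: bool) -> Stage:
    """Provider review leads to arbitration only if the score is disputed."""
    if current is Stage.ASSESSMENT:
        return Stage.PROVIDER_REVIEW
    if current is Stage.PROVIDER_REVIEW:
        return Stage.ARBITRATION if disputed else Stage.CLOSED
    if current is Stage.ARBITRATION:
        return Stage.FINAL_ADJUSTMENT
    return Stage.CLOSED


def open_task(stage: Stage, today: date,
              notify: Callable[[str, str], None]) -> Optional[Task]:
    """Create the task for a stage with its deadline and notify the responsible party."""
    if stage is Stage.CLOSED:
        return None
    role, turnaround = STAGE_SETTINGS[stage]
    task = Task(stage, role, today + turnaround)
    notify(role, f"{stage.value}: due {task.due.isoformat()}")
    return task
```

Keeping the transition rules in `next_stage` and the deadlines in a single settings table means the turnaround for any stage can be tuned without touching the workflow logic.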

It is worth noting that although AI is making remarkable progress in translation evaluation, human experts are still involved, and they need a modern tool for their work.

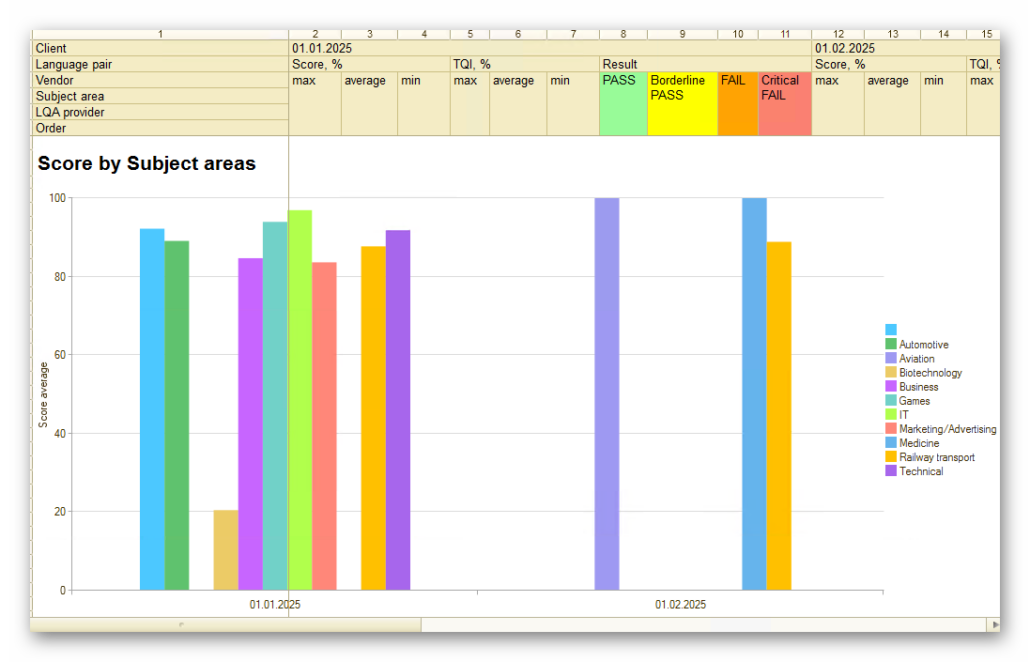

Module 3 – Reporting

This module is the most important one for data visualization and LQA analysis. Previously, assessment data existed as disparate fragments, one per check. Now the reporting system can collect data for any desired timeframe and parameters (such as clients, language pairs, and vendors) and deliver results in minimal time. There is no longer any need to manually compile data from multiple files into one large Excel spreadsheet.
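The kind of query the Reporting module replaces could be sketched as follows, assuming each completed check is stored as one record with a client, language pair, vendor, completion date, and score (a hypothetical layout, not Smart LQA's actual schema). The illustrative `report` function averages scores over a date range, grouped by any one of the three parameters, and `trend` compares two periods to show whether quality for a given client, language pair, or vendor is moving up or down.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class Assessment:
    client: str
    language_pair: str
    vendor: str
    completed_on: date
    score: float


def report(assessments: list[Assessment], start: date, end: date,
           by: str) -> dict[str, float]:
    """Average score per value of `by` ('client', 'language_pair' or 'vendor') in [start, end]."""
    groups: dict[str, list[float]] = defaultdict(list)
    for a in assessments:
        if start <= a.completed_on <= end:
            groups[getattr(a, by)].append(a.score)
    return {key: round(mean(scores), 2) for key, scores in sorted(groups.items())}


def trend(assessments: list[Assessment], by: str, key: str,
          earlier: tuple[date, date], later: tuple[date, date]) -> Optional[float]:
    """Change in average score between two periods; None if either period has no data."""
    before = report(assessments, earlier[0], earlier[1], by).get(key)
    after = report(assessments, later[0], later[1], by).get(key)
    if before is None or after is None:
        return None
    return round(after - before, 2)
```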

And, of course, the Smart LQA project team has other exciting ideas in the works.