AI in the translation business: beyond MT

Introduction

The aim of this article is to identify and briefly describe areas of the translation business that may benefit from AI, and from machine learning (ML) in particular. Since AI is not entirely predictable, implementing any kind of AI also brings new risks. Introducing AI, or replacing good old algorithmic automation with it, may therefore have an unexpected impact. Or lead to a positive breakthrough?

In the language industry, we have been watching Machine Translation, the most visible application of ML, evolve continuously, so we already know what to expect from it, what its benefits are and how to address its risks. The way we manage translation work also offers great potential for ML-driven improvements. This includes automated decision-making based on collected data, either to give human workers pointers or to take over manual steps entirely and so remove bottlenecks in Continuous Development/Continuous Translation. ML could also make predictions, bring potential risks to light (of missing a deadline, losing a client, etc.) and perhaps propose corrective measures.

Since ML can be applied to many different areas, using different learning approaches (supervised or unsupervised learning, reinforcement learning, transfer learning, etc.), searching for the best combinations is an interesting journey, and one we would like to take.

First steps: ML-powered vendor suggestion

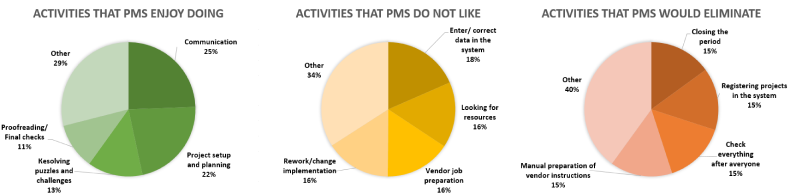

A couple of years ago, we surveyed our project managers to find out where machine learning could simplify and speed up their work. It turned out that project managers spend quite a lot of time searching for the right vendor for a particular job, and that this is far from their favorite activity. This is why we began experimenting with ML to select the best vendor for a project.

To begin with, we wanted to find out how realistic it was to train a neural network to make the same choice as a human project manager. We took the input data and the assigned vendors from several tens of thousands of completed jobs and used them to train a model. Although many sophisticated neural network architectures are available, we chose a simple regression-based classification model, and it performed fairly well: validation showed that it picked the same vendor as the project manager in more than 99% of cases. However, when we tried to use its output in production, we found that the selected vendors were often not suitable. One reason was that, in the past, work had often gone to a vendor that, from today's perspective, was far from the best one for the job. The principle of “Garbage in – Garbage out” in action.
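
To illustrate why a validation score above 99% can be misleading, here is a deliberately simple sketch. It is not the regression model we used: it is a hypothetical majority-vote baseline that predicts, for each (language pair, domain) combination, the vendor PMs picked most often. The record layout and vendor names are assumptions. Because accuracy is measured against historical PM choices, it rewards faithful imitation of the past, including past choices that were poor.

```python
from collections import Counter, defaultdict

# Hypothetical job records: (language_pair, domain, vendor chosen by the PM)
history = [
    ("en-de", "legal", "vendor_a"),
    ("en-de", "legal", "vendor_a"),
    ("en-de", "legal", "vendor_b"),
    ("en-fr", "medical", "vendor_c"),
    ("en-fr", "medical", "vendor_c"),
]

def train(records):
    """Count how often each vendor was picked for each (language pair, domain)."""
    counts = defaultdict(Counter)
    for pair, domain, vendor in records:
        counts[(pair, domain)][vendor] += 1
    return counts

def predict(model, pair, domain):
    """Return the vendor PMs picked most often for this job type, or None if unseen."""
    votes = model.get((pair, domain))  # .get does not create empty entries
    return votes.most_common(1)[0][0] if votes else None

def accuracy(model, records):
    """Share of records where the prediction equals the historical PM choice."""
    hits = sum(predict(model, p, d) == v for p, d, v in records)
    return hits / len(records)

model = train(history)
print(predict(model, "en-de", "legal"))  # vendor_a
print(accuracy(model, history))          # 0.8
```

The score only says how well the model copies past decisions; it says nothing about whether those decisions were good.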

Re-evaluation of PM activity landscape

Recently, we conducted another survey to understand which project management activities take up most of our managers' time, and in which other areas it makes sense to experiment with machine learning. The survey showed that searching for the best vendor for a project is still the number one task. It also made clear which other areas we should work on.

The table below lists the project management activities we intend to improve, together with ideas on how to partially automate each one or hand it over to the machine completely.

In the table, “the system” refers to our Project Management / Enterprise Resource Planning (ERP) system.

| Manual activity | How to partially automate with ML | How to eliminate the manual activity |
| --- | --- | --- |
| Looking for resources | Suggest resources (vendors, TMs, glossaries, etc.) from similar projects | Choose the best vendor for each job, considering language pair, domain, qualification, experience, cost, availability, productivity, PM/reviewer feedback and other parameters |
| Project setup and planning | Propose a project workflow based on the SLA and similar past projects; estimate task ETAs and the critical path | – |
| Manual preparation of vendor instructions | Extract key requirements and draft a structured vendor instruction from them | – |
| Deadline monitoring | Estimate the probability of missing the deadline in real time from historical data, and alert the PM | Several control layers could provide reliable deadline control and eliminate manual effort altogether |
| Correct data in the system | Analyze data consistency in the background against common patterns, and alert the person responsible about possibly incorrect data | – |

Vendor suggestion 2.0

As mentioned above, the ML-based vendor suggestion worked, but it often gave results that were wrong (or at least seemed “wrong” to some PMs). To resolve this, we decided to:

- Take into account who is managing the project (and therefore receives the suggestion). This allows the ML output to be customized per person or department, so that collected data can still be reused across different PMs while “wrong” suggestions are eliminated.

- Reduce the weight of records in the training/validation data set as they become obsolete. Recent data then takes precedence over data that is several years old, and data older than five years is not considered at all.
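
The two changes above can be sketched as follows. The decay curve is not specified in the article, so this sketch assumes a linear decay from 1.0 for a job completed today to 0.0 at five years; the job dictionary and PM identifiers are hypothetical. The PM simply becomes one more input feature.

```python
from datetime import date

MAX_AGE_YEARS = 5.0  # records older than this are ignored

def record_weight(completed_on: date, today: date) -> float:
    """Hypothetical linear decay: 1.0 for a job completed today, 0.0 from five years on."""
    age_years = (today - completed_on).days / 365.25
    return min(1.0, max(0.0, 1.0 - age_years / MAX_AGE_YEARS))

def make_features(job: dict) -> dict:
    """Hypothetical feature set; "pm" lets the model learn per-PM/department preferences."""
    return {
        "language_pair": job["language_pair"],
        "domain": job["domain"],
        "pm": job["pm"],
    }

today = date(2024, 1, 1)
jobs = [
    {"language_pair": "en-de", "domain": "legal", "pm": "pm_anna", "completed_on": date(2023, 12, 1)},
    {"language_pair": "en-de", "domain": "legal", "pm": "pm_ben", "completed_on": date(2020, 1, 1)},
    {"language_pair": "en-fr", "domain": "medical", "pm": "pm_anna", "completed_on": date(2017, 6, 1)},
]
# Records with zero weight (older than five years) are dropped before training.
training_set = [
    (make_features(j), record_weight(j["completed_on"], today))
    for j in jobs
    if record_weight(j["completed_on"], today) > 0
]
print(len(training_set))  # 2
```

Many scikit-learn estimators accept such per-record weights through the `sample_weight` argument of `fit`.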

The results of this improved architecture have not been published yet, but we already have a list of adjustments for the next iteration. Instead of regularly retraining the model from scratch on the data set of the past five years, we will try switching to reinforcement learning on the data that arrives after the network's initial training.
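
The article does not say what the reinforcement learning setup will look like. One simple possibility is to treat vendor selection as a multi-armed bandit: each vendor is an arm, and PM/reviewer feedback on a finished job is the reward. The sketch below is an epsilon-greedy bandit that updates a running mean per vendor after each job instead of retraining on five years of data. The class name and the 0-to-1 reward scale are hypothetical; a production version would also condition on the job context (language pair, domain, PM), i.e. a contextual bandit.

```python
import random

class EpsilonGreedyVendorPicker:
    """Epsilon-greedy multi-armed bandit: each vendor is an arm, job feedback is the reward."""

    def __init__(self, vendors, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {v: 0 for v in vendors}    # jobs observed per vendor
        self.values = {v: 0.0 for v in vendors}  # running mean reward per vendor

    def choose(self):
        # With probability epsilon, explore a random vendor; otherwise exploit the best so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return max(self.values, key=self.values.get)

    def update(self, vendor, reward):
        # Incremental mean update: each finished job adjusts the estimate, no full retraining.
        self.counts[vendor] += 1
        self.values[vendor] += (reward - self.values[vendor]) / self.counts[vendor]

# Hypothetical feedback scores between 0 (poor) and 1 (excellent).
picker = EpsilonGreedyVendorPicker(["vendor_a", "vendor_b"], epsilon=0.0)
picker.update("vendor_a", 0.6)
picker.update("vendor_b", 0.9)
print(picker.choose())  # vendor_b
```

A non-zero `epsilon` keeps occasionally routing work to other vendors, so that new or improved vendors get a chance to prove themselves.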

Apart from that, we can simply compare a new project with completed past projects on characteristics such as client/account, language pair(s), domain, SLA and a linguistic snapshot of the text to be translated (hello, Natural Language Processing!), and reuse resources from matching past projects where appropriate.
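
A minimal sketch of such matching, assuming hypothetical project dictionaries: each metadata attribute that matches exactly adds one point, and the "linguistic snapshot" is approximated by the Jaccard overlap of the word sets, a crude stand-in for proper NLP representations such as TF-IDF vectors or embeddings. The scoring scheme and the threshold of 4.0 are assumptions.

```python
def jaccard(a: set, b: set) -> float:
    """Word-set overlap: 0.0 for disjoint sets, 1.0 for identical ones."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def similarity(new: dict, past: dict) -> float:
    """Hypothetical score: 1 point per matching attribute plus text overlap (max 5.0)."""
    score = sum(1.0 for key in ("account", "language_pair", "domain", "sla") if new[key] == past[key])
    words_new = set(new["text"].lower().split())
    words_past = set(past["text"].lower().split())
    return score + jaccard(words_new, words_past)

def best_match(new: dict, past_projects: list, threshold: float = 4.0):
    """Most similar past project if its score reaches the threshold, else None."""
    if not past_projects:
        return None
    best = max(past_projects, key=lambda p: similarity(new, p))
    return best if similarity(new, best) >= threshold else None

past_projects = [
    {"id": "P-1", "account": "acme", "language_pair": "en-de", "domain": "legal", "sla": "standard",
     "text": "service agreement termination clause", "vendor": "vendor_a", "tm": "acme-legal-de"},
    {"id": "P-2", "account": "acme", "language_pair": "en-fr", "domain": "marketing", "sla": "express",
     "text": "spring campaign banner copy", "vendor": "vendor_c", "tm": "acme-mkt-fr"},
]
new_project = {"account": "acme", "language_pair": "en-de", "domain": "legal", "sla": "standard",
               "text": "Termination clause of the service agreement"}

match = best_match(new_project, past_projects)
print(match["vendor"], match["tm"])  # vendor_a acme-legal-de
```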

What else is on the ML roadmap

Correct data in the system

While processing the records of completed jobs, we found a number of inconsistencies. Analyzing them, we realized that with a little extra effort such incorrect data, which also makes project managers' lives harder, can be identified with a high degree of certainty. Incorrect data may be caused by a wrong user action, a bug in the program code or a simple typo. Whatever the cause, we can at least detect it, and there is no reason not to.

Erroneous data could be detected either by an extra analysis step in an existing scheduled data processing task or by a separate scheduled data analysis task. In both cases, the goal is to identify records that do not match a common pattern (learned with a regression model). Automatically correcting data that merely seems incorrect would be too risky, so it is enough to report a potential error to the responsible employee, who can verify it and fix it if needed.
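
As an illustration of pattern-based detection, the sketch below assumes a hypothetical pattern in which the hours booked for a job grow roughly linearly with its word count. It fits an ordinary least-squares line and flags records whose residual exceeds `k` standard deviations (`k = 2` is an arbitrary choice). The flagged IDs are only reported, never corrected automatically.

```python
from statistics import mean, pstdev

def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x (needs at least two distinct x values)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def flag_suspicious(records, k=2.0):
    """Return IDs of records whose residual is more than k standard deviations from the fit."""
    xs = [r["words"] for r in records]
    ys = [r["hours"] for r in records]
    a, b = fit_line(xs, ys)
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    sd = pstdev(residuals)
    if sd == 0:
        return []  # every record fits the pattern exactly
    return [r["id"] for r, res in zip(records, residuals) if abs(res) > k * sd]

# Hypothetical completed jobs: 2 hours per 1,000 words, except J-4 (80 hours, perhaps a typo for 8).
jobs = [{"id": f"J-{i}", "words": 1000 * i, "hours": 2 * i} for i in range(1, 9)]
jobs[3]["hours"] = 80
print(flag_suspicious(jobs))  # ['J-4']
```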

Project setup and planning

Often, at the start of a project (for example, at the quotation or project acknowledgement stage), we need to determine a realistic project deadline. To do so, we need to estimate the duration of each stage in the project workflow, calculate the critical path and add a time buffer for contingencies. The duration of each specific task can be predicted by a neural network previously trained on data from completed tasks.
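
The planning step can be sketched as a critical path calculation over the workflow's dependency graph. The task names, durations and the 20% contingency buffer below are hypothetical; in practice, the per-task durations would come from the trained model. The function assumes the workflow contains no cycles.

```python
def critical_path(tasks):
    """Longest dependency chain in a workflow.

    tasks maps a task name to (estimated_duration_days, [predecessor names]).
    Returns (total_duration, [task names along the critical path]).
    """
    finish = {}  # earliest finish time of each task
    via = {}     # predecessor that determines each task's start

    def earliest_finish(name):
        if name not in finish:
            duration, preds = tasks[name]
            start, via[name] = 0.0, None
            for p in preds:
                if earliest_finish(p) > start:
                    start, via[name] = finish[p], p
            finish[name] = start + duration
        return finish[name]

    last = max(tasks, key=earliest_finish)
    path, node = [], last
    while node is not None:
        path.append(node)
        node = via[node]
    return finish[last], path[::-1]

# Hypothetical workflow with model-estimated durations in days.
workflow = {
    "engineering": (0.5, []),
    "translation": (3.0, ["engineering"]),
    "editing": (1.5, ["translation"]),
    "dtp": (2.0, ["translation"]),
    "proofreading": (1.0, ["editing"]),
    "final_qa": (0.5, ["proofreading", "dtp"]),
}
BUFFER = 0.2  # 20% contingency, an arbitrary example value

length, path = critical_path(workflow)
print(path)                             # ['engineering', 'translation', 'editing', 'proofreading', 'final_qa']
print(round(length * (1 + BUFFER), 2))  # 7.8
```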

Another option, good enough for a quick preliminary deadline estimate, is to train the network on data from previously completed projects, taking into account the volume, language pairs, domain and other characteristics of the project. The estimate from such a network will be approximate, but the approach is much simpler to implement.
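
For comparison, here is an even cruder heuristic in the spirit of this quick project-level estimate, without a neural network: take the median pace (days per word) of past projects with the same language pair and domain, and multiply it by the new volume. The field names and data are hypothetical, and a trained model would weigh many more characteristics.

```python
from statistics import median

def quick_estimate(volume_words, language_pair, domain, past_projects):
    """Rough duration in days: median pace of comparable past projects times the volume."""
    paces = [
        p["days"] / p["words"]  # days per word
        for p in past_projects
        if p["language_pair"] == language_pair and p["domain"] == domain and p["words"] > 0
    ]
    if not paces:
        return None  # no comparable history: fall back to a manual estimate
    return median(paces) * volume_words

# Hypothetical history of completed projects.
completed = [
    {"language_pair": "en-de", "domain": "legal", "words": 10000, "days": 5},
    {"language_pair": "en-de", "domain": "legal", "words": 4000, "days": 3},
    {"language_pair": "en-de", "domain": "legal", "words": 20000, "days": 8},
    {"language_pair": "en-fr", "domain": "marketing", "words": 6000, "days": 2},
]
print(quick_estimate(12000, "en-de", "legal", completed))  # roughly 6 days
```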

Deadline monitoring

For deadline monitoring, we can use the same approach as for project planning. However, once the project has been launched and work is in full swing, we have much more data about it: for example, we know which tasks have already been completed and which specific vendors are assigned to the remaining tasks. So in this case it is enough to estimate realistic completion times for the remaining tasks, calculate their critical path, and add its length to the current time to get the most probable project completion time, which we then compare with the agreed deadline. If the estimated completion time is earlier than the agreed deadline, we are happy: there is a good chance of meeting it. If it is later, we can roughly estimate the probability of still meeting the deadline by applying the difference to a suitable normal distribution (for example, one describing how far our past estimates deviated from actual completion times). This probability will definitely be lower than 100%, and below 50% if the distribution is centered on zero.
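
The probability step can be sketched with the standard library's `statistics.NormalDist`, assuming that the estimation error (actual minus estimated completion time) is normally distributed with zero mean and a standard deviation taken from past projects; the value of 1 day used below is hypothetical. `remaining_days` stands for the critical-path length of the remaining tasks.

```python
from datetime import datetime, timedelta
from statistics import NormalDist

def p_meet_deadline(now, remaining_days, deadline, error_sd_days):
    """Probability that the project finishes by the deadline.

    Assumes the actual completion time deviates from the estimate by a normally
    distributed error with zero mean and standard deviation error_sd_days.
    """
    estimated_finish = now + timedelta(days=remaining_days)
    slack_days = (deadline - estimated_finish).total_seconds() / 86400
    # The project is on time if it overruns the estimate by no more than the slack.
    return NormalDist(mu=0.0, sigma=error_sd_days).cdf(slack_days)

now = datetime(2024, 3, 1, 9, 0)
on_track = p_meet_deadline(now, 4.0, datetime(2024, 3, 6, 9, 0), error_sd_days=1.0)
late = p_meet_deadline(now, 4.0, datetime(2024, 3, 4, 9, 0), error_sd_days=1.0)
print(f"{on_track:.2f} {late:.2f}")  # 0.84 0.16
```

An alert could then be raised whenever the probability drops below a threshold agreed with the PM team.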

Manual preparation of vendor instructions

Although preparing clear, consistent and up-to-date instructions for vendors is an important part of the project management routine, it often comes down to copying and pasting the client's instructions, which is crude and unrewarding. Maintaining a set of up-to-date instructions for every type of vendor involved in an account's workflow (Engineer, Translator, Editor, Proofreader, DTP specialist, etc.) is good practice, but it can be excessive or simply unrealistic in some cases.

Natural Language Processing techniques such as Information Extraction and Named Entity Recognition can help here. By running them on a project description received from the client in unstructured form, we could extract project properties (such as volume, language pair, account, project name, deadline and many more) as separate values. We then just fill in a structured instruction template with them to get a draft vendor instruction. And if the corresponding project has not yet been created in your Project Management System, it may be a good idea to create it from the extracted properties.
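
A minimal sketch of the extract-then-fill idea. Regular expressions stand in for real Information Extraction/NER models here, so they only cope with phrasings like the example below; the patterns, field names and template are hypothetical. `Template.safe_substitute` leaves unfilled `$placeholders` visible, showing the PM what still has to be completed by hand.

```python
import re
from string import Template

def extract_properties(text: str) -> dict:
    """Pull a few project properties out of free-form client text (regex stand-in for IE/NER)."""
    props = {}
    if m := re.search(r"(\d[\d,]*)\s+words", text, re.IGNORECASE):
        props["volume"] = int(m.group(1).replace(",", ""))
    if m := re.search(r"from\s+(\w+)\s+into\s+(\w+)", text, re.IGNORECASE):
        props["language_pair"] = f"{m.group(1)} > {m.group(2)}"
    if m := re.search(r"deadline\D*(\d{4}-\d{2}-\d{2})", text, re.IGNORECASE):
        props["deadline"] = m.group(1)
    if m := re.search(r'project\s+"([^"]+)"', text, re.IGNORECASE):
        props["project_name"] = m.group(1)
    return props

# Hypothetical structured instruction template.
INSTRUCTION = Template(
    "Project: $project_name\n"
    "Languages: $language_pair\n"
    "Volume: $volume words\n"
    "Deadline: $deadline\n"
)

def draft_instruction(text: str) -> str:
    # safe_substitute keeps any placeholder that could not be filled, e.g. "$deadline".
    return INSTRUCTION.safe_substitute(extract_properties(text))

email = 'Please translate project "Spring Catalogue" from English into German, about 12,500 words. Deadline: 2024-05-20.'
print(draft_instruction(email))
```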

Build or buy

As technology moves forward and the world becomes increasingly digital, software development is becoming more and more important to Language Service Providers. However, complex, relatively new and quickly evolving areas like Machine Learning may still be too difficult to adopt.

To deal with this, a company may either build its own ML microservices in Python or R, or use the ready-made cognitive services offered by IT giants such as Google, Amazon and Microsoft, or by integrators such as Intento.

| Machine Learning service provider | Pros | Cons |
| --- | --- | --- |
| Local proprietary development | Better understanding of what happens under the ML hood; absolute confidentiality; better availability | An ML development team may be too expensive for a small or mid-sized company; development may take longer; product quality may be lower |
| Professional cloud service | Quick start; proven, high-quality solutions; higher peak performance | Data must be disclosed to a third party; more expensive in the long run |

Although the results of adopting ML can be amazing, they come with risks. So it makes sense to introduce ML solutions as helpers in the operational environment, but to keep a human eye on what they do until you can trust them and have properly evaluated the possible risks.